https://www.brentozar.com/archive/2013/09/iops-are-a-scam/

Storage vendors brag about the IOPS that their hardware can provide. Cloud providers have offered guaranteed IOPS for a while now. It seems that no matter where we turn, we can't get away from IOPS.

WHAT ARE YOU MEASURING?

When someone says IOPS, what are they referring to? IOPS is an acronym for Input/Output Operations Per Second. It's a measure of how many physical read/write operations a device can perform in one second.

IOPS are relied upon as an arbiter of storage performance. After all, if something has 7,000 IOPS, it's gotta be faster than something with only 300 IOPS, right?

The answer, as it turns out, is a resounding "maybe."

Most storage vendors perform their IOPS measurements using a 4k block size, which is irrelevant for SQL Server workloads; remember that SQL Server reads data 64k at a time (mostly). Are you slowly getting the feeling that the shiny thing you bought is a piece of wood covered in aluminum foil?

Those 50,000 IOPS SSDs are really only going to give you 3,125 64KiB IOPS. And that 7,000 IOPS number that Amazon promised you? That's in 16KiB IOPS. When you scale those numbers to 64KiB IOPS it works out to 1,750 64KiB IOPS for SQL Server RDS.
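The conversions above are simple enough to script. Here's a minimal sketch, assuming the device is limited by bandwidth so that total bytes per second stay constant as the block size changes (real hardware won't scale this cleanly). The function name `rescale_iops` is made up for illustration.

```python
def rescale_iops(iops: float, from_kib: int, to_kib: int) -> float:
    """Rescale an IOPS figure quoted at one block size to another block size.

    Assumes the same number of bytes per second moves either way, so
    larger blocks mean proportionally fewer operations.
    """
    return iops * from_kib / to_kib


# The two examples from the text:
print(rescale_iops(50_000, 4, 64))   # 4KiB vendor number -> 3125.0 at 64KiB
print(rescale_iops(7_000, 16, 64))   # 16KiB cloud number -> 1750.0 at 64KiB
```

Treat the result as a best case; the only way to know what a device really does at 64KiB is to test it.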

LATENCY VS IOPS

What about latency? Where does that fit in?

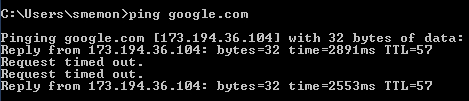

Latency is a measure of the duration between issuing a request and receiving a response. If you've ever played Counter-Strike, or just run ping, you know about latency. Latency is what we blame when we have unpredictable response times, can't get to Google, or when I can't manage to get a headshot because I'm terrible at shooters.

Why does latency matter for disks?

It takes time to spin a disk platter and it takes time to move the read/write head of a disk into position. This introduces latency into rotational hard drives. Rotational HDDs have great sequential read/write numbers, but terrible random read/write numbers for the simple reason that the laws of physics get in the way.

Even SSDs have latency, though. Within an SSD, a controller is responsible for a finite number of chips. Some SSDs have multiple controllers, some have only one. Either way, a controller can only pull data off of the device so fast. As requests queue up, latency can be introduced.

On busy systems, the PCI-Express bus can even become a bottleneck. The PCI-E bus is shared among I/O controllers, network controllers, and other expansion cards. If several of those devices are in use at the same time, it's possible to see latency just from access to the PCI-E bus.

What could trigger PCI-E bottlenecks? A pair of high end PCI-E SSDs (TempDB) can theoretically produce more data than the PCI-E bus can transfer. When you use both PCI-E SSDs and Fibre Channel HBAs, it's easy to run into situations that can introduce random latency into PCI-E performance.

WHAT ABOUT THROUGHPUT?

Throughput is often measured as IOPS * operation size in bytes. So when you see that a disk is able to perform X IOPS or Y MB/s, you know what that number means – it's a measure of capability, but not necessarily timeliness. You could get 4,000 MB/s delivered after a 500 millisecond delay.

Although throughput is a good indication of what you can actually expect from a disk under perfect lab test conditions, it's still no good for measuring performance.

Amazon's SQL Server RDS promise of 7,000 IOPS sounds great until you put it into perspective. 7,000 IOPS * 16KiB = 112,000 KiB per second, which is roughly 115MB per second, or a little under 1 gigabit per second. Or, as you or I might call it, 1 gigabit ethernet.
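The arithmetic above can be sketched in a few lines. This is only an illustration of IOPS * operation size; the function name `throughput_bytes_per_sec` is hypothetical, and the figure it returns is a ceiling, not a promise of what you'll measure.

```python
KIB = 1024  # bytes in one KiB


def throughput_bytes_per_sec(iops: int, block_kib: int) -> int:
    """Throughput as IOPS multiplied by the operation size in bytes."""
    return iops * block_kib * KIB


bytes_per_sec = throughput_bytes_per_sec(7_000, 16)

# Express the same number in the units vendors and network folks use.
print(bytes_per_sec / 1_000_000)          # megabytes per second
print(bytes_per_sec * 8 / 1_000_000_000)  # gigabits per second
```

Seeing the number in gigabits per second makes the comparison with a single network link easy to eyeball.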

WHAT DOES GOOD STORAGE LOOK LIKE?

Measuring storage performance is tricky. IOPS and throughput are measurements of activity, but there's no measure of timeliness involved. Latency is a measure of timeliness, but it's devoid of speed.

Combining IOPS, throughput, and latency numbers is a step in the right direction. It lets us combine activity (IOPS), throughput (MB/s), and performance (latency) to examine system performance.

Predictable latency is incredibly important for disk drives. If we have no idea how the disks will perform, we can't predict application performance and have acceptable SLAs.
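One way to put a number on "predictable" is to look at the tail of the latency distribution rather than the average. The sketch below uses made-up samples (both lists are hypothetical, not measurements) and a simple nearest-rank percentile; `latency_summary` is an illustrative name, not part of any tool.

```python
import math


def latency_summary(samples_ms):
    """Summarize latency samples (milliseconds) with mean, median, and tail."""
    ordered = sorted(samples_ms)

    def percentile(p):
        # Nearest-rank percentile: the smallest sample that is at least
        # as large as p percent of all samples.
        rank = max(1, math.ceil(p / 100 * len(ordered)))
        return ordered[rank - 1]

    return {
        "mean": sum(ordered) / len(ordered),
        "p50": percentile(50),
        "p99": percentile(99),
        "max": ordered[-1],
    }


# Hypothetical data: two disks with the same average latency.
steady = [5] * 100            # every request takes 5 ms
spiky = [1] * 95 + [81] * 5   # mostly 1 ms, occasionally 81 ms

print(latency_summary(steady))
print(latency_summary(spiky))
```

Both lists average 5 ms, but the second one has a 99th percentile of 81 ms. An SLA built on the average would look fine on paper and still fail for the requests that land in the tail.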

In their Systematic Look at EC2 I/O, Scalyr demonstrate that drive latency varies widely in EC2. While these numbers will vary across storage providers, keep in mind that latency is a very real thing and it can cause problems for shared storage and dedicated disks alike.

WHAT CAN WE DO ABOUT IOPS AND LATENCY?

The first step is to make sure we know what the numbers mean. Don't hesitate to convert the vendor's numbers into something relevant for your scenario. It's easy enough to turn 4k IOPS into 64k IOPS or to convert IOPS into MB/s measurements. Once we've converted to an understandable metric, we can verify performance using SQLIO and compare the advertised numbers with real world numbers.

But to get the most out of our hardware, we need to make sure that we're following best practices for SQL Server setup. Once we know that SQL Server is set up well, it's also important to consider adding memory, carefully tuning indexes, and avoiding query anti-patterns.

Even though we can't make storage faster, we can make storage do less work. In the end, making the storage do less gets the same results as making the storage faster.